A few days ago I promised you my post-Frascati thoughts on the Voynich Manuscript radiocarbon dating. Errrm… little did I know quite what I was letting myself in for. It’s been a fairly bumpy ride. 🙁

Just so you know, the starting point here isn’t ‘raw data’, strictly speaking. The fraction of radioactive carbon-14 remaining (as determined by the science) first needs to be adjusted to its effective 1950 value so that it can be cross-referenced against the various historical calibration tables, such as “IntCal09”. The “corrected fraction” value is therefore the fraction of radioactive carbon-14 that would have been remaining in the sample had it been sampled in 1950 rather than (in this case) 2009. Though annoying, this pre-processing stage is basically automatic and hence largely unremarkable.

So, the (nearly) raw Voynich data looks like this:

Folio / language / corrected fraction modern [standard deviation]

f8 / Herbal-A / 0.9409 [0.0044]

f26 / Herbal-B / 0.9380 [0.0041]

f47 / Herbal-A / 0.9389 [0.0041]

f68 / Cosmo-A / 0.9338 [0.0041]

What normally happens next is that these corrected fraction data are converted to an uncalibrated fake date BP (‘Before Present’, i.e. years before 1950), based purely on the theoretical radiocarbon half-life decay period: for example, the f8 sample would have an uncalibrated radiocarbon date of “490±37BP” (i.e. “1460±37”).
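To make that conversion concrete, here is a minimal Python sketch. It assumes the conventional Libby mean-life of 8033 years (i.e. a 5568-year half-life divided by ln 2) that is used for uncalibrated radiocarbon ages; the function names are purely illustrative, and a lab's own rounding conventions may differ slightly.

```python
import math

# Assumption: conventional Libby mean-life in years (5568-year half-life / ln 2)
LIBBY_MEAN_LIFE = 8033.0

def uncalibrated_age_bp(fraction_modern):
    """Uncalibrated radiocarbon age in years BP (i.e. before 1950)."""
    return -LIBBY_MEAN_LIFE * math.log(fraction_modern)

def age_sigma(fraction_modern, fraction_sigma):
    """First-order error propagation: sigma_age = 8033 * sigma_F / F."""
    return LIBBY_MEAN_LIFE * fraction_sigma / fraction_modern

# (corrected fraction modern, standard deviation) from the table above
samples = {
    "f8": (0.9409, 0.0044),
    "f26": (0.9380, 0.0041),
    "f47": (0.9389, 0.0041),
    "f68": (0.9338, 0.0041),
}

for folio, (f, s) in samples.items():
    age = uncalibrated_age_bp(f)
    print(f"{folio}: {age:.0f} ± {age_sigma(f, s):.0f} BP "
          f"(nominal uncalibrated year {1950 - age:.0f})")
```

For f8 this lands within a year or so of the “490±37BP” figure quoted above. Note that a smaller fraction means an older sample, which is why 0.9409 maps to roughly 490 years rather than to any number you can read directly off the digits.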

However, this is both a confusing and an unhelpful aspect of the literature, because we’re only really interested in the calibrated radiocarbon dates, as read off the curves painstakingly calibrated against several thousand years of tree rings; so I prefer to omit it. Hence in the following I stick to corrected fractional values (e.g. 0.9409) or their straightforward percentage equivalents (e.g. 94.09%): even though these are equivalent to uncalibrated radiocarbon dates, I feel that mixing two different kinds of radiocarbon dates within single sentences is far too prone to confusion and error. It’s hard enough already without making it any harder. 🙁

The problem with the calibration curves in the literature is that they aren’t ‘monotonic’, i.e. they kick up and down. This means that many individual (input) radiocarbon fraction observations end up yielding two or more parallel (output) date ranges, which makes using them as a basis for historical reasoning both tricky and frustrating.

Yet as Greg Hodgins described in his Frascati talk, radiocarbon daters are mainly in the business of disproving things rather than proving things. In this case, you might say that all radiocarbon dating has achieved is to finally disprove Wilfrid Voynich’s suggestion that Roger Bacon wrote the Voynich Manuscript… an hypothesis that hasn’t been genuinely proposed for a couple of decades or so.

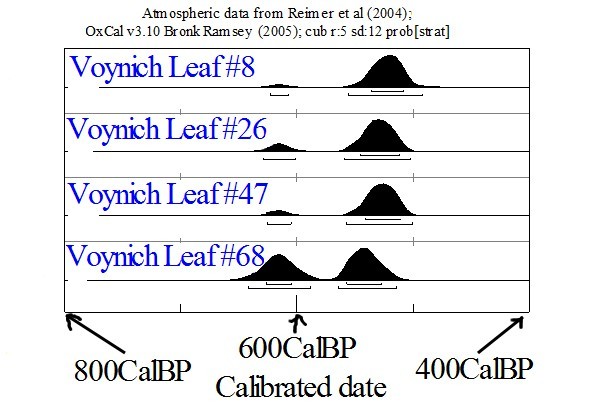

Of course, Voynich researchers are constantly looking out for ways in which they can use or combine contentious / subtle data to build better historical arguments: and so for them radiocarbon dating is merely one of many such datasets to be explored. In this instance, the science has produced four individual observations (for the four carefully treated vellum slivers), each with its own probability curve.

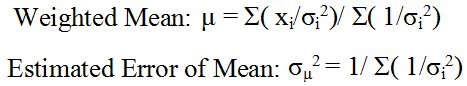

The obvious desire here is to find a way of reliably combining all four observations into a single, more reliable, composite meta-observation. The two specific formulae Greg Hodgins lists for doing this are:-

So, I built these formulae into a spreadsheet, yielding a resultant composite fractional value for all four of 0.93779785, with a standard deviation of 0.004169. I’m pretty certain this yields the headline date-range of 1404-1438 with 95% confidence (i.e. ±2 sigma) quoted just about everywhere since 2009. But… is it valid?
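For anyone wanting to re-run the numbers without a spreadsheet, here is a small Python sketch. It assumes the first formula is the usual inverse-variance weighted mean (an assumption, though it does reproduce the 0.93779785 figure). For the spread it computes the textbook standard error of a weighted mean, 1/sqrt(sum of weights), which comes out at roughly half the 0.004169 quoted above; so the second formula may differ from this one, or the quoted value may already be a 2-sigma figure.

```python
import math

# (corrected fraction modern, standard deviation) from the table above
samples = {
    "f8": (0.9409, 0.0044),
    "f26": (0.9380, 0.0041),
    "f47": (0.9389, 0.0041),
    "f68": (0.9338, 0.0041),
}

def weighted_mean(values_and_sigmas):
    """Inverse-variance weighted mean and its textbook standard error."""
    weights = [1.0 / sigma**2 for _, sigma in values_and_sigmas]
    total = sum(weights)
    mean = sum(w * v for w, (v, _) in zip(weights, values_and_sigmas)) / total
    std_error = math.sqrt(1.0 / total)  # assumption: standard formula, not confirmed by the post
    return mean, std_error

mean, std_error = weighted_mean(list(samples.values()))
print(f"weighted mean fraction: {mean:.8f}")
print(f"standard error:         {std_error:.6f}")
```

This only checks the arithmetic in the fraction domain; turning the composite fraction into a calendar range still needs the calibration curve.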

Well… as with almost everything in the statistical toolbox, I’m pretty sure that this requires that the underlying observations being merged exhibit ‘normality’ (i.e. that they broadly look like simple bell curves). Yet if you look at the four probabilistic dating curves, the earliest calibrated date (on f68) yields two distinct dating ‘humps’, whereas the latest calibrated date (on f8) has almost no chance of falling within the earlier dating hump. This means that the four distributions range from normal-like (with a single peak) to bimodal (with two distinct peaks).

Now, the idea of having formulae to calculate weighted means and standard deviations is to treat a set of individual (yet distinct) observations as samples of a single underlying population, using the increased information content to get tighter constraints on the results. However, I’m not convinced that this single-population assumption is valid here, because we are very likely sampling vellum taken from a number of different animal skins, very possibly produced under a variety of conditions at a number of different times.

Another problem is that we are trying to use probability distributions to do “double duty”, in that we often have a multiplicity of local means to choose between (and we can’t tell which sub-distribution any individual sample should belong to) as well as a kind of broadly normal-like distribution for each local mean. This is mixing scenario evaluation with probability evaluation, and both end up worse off for it.

A further problematic area here is that corrected fractional input values have a non-linear relationship with their output results, which means that a composite fractional mean will typically be different from a composite dating value.

A yet further problem is that we’re dealing with a very small number of samples, leaving any composite value susceptible to being excessively influenced by outliers.

As far as this last point goes, I have two specific concerns:

* Rene Zandbergen mentioned (and Greg confirmed) that one of the herbal bifolios was specifically selected for its thickness, in order (as I understand it) to try to give a reliable value after applying solvents. Yet when I examined the Voynich at the Beinecke back in 2006, there was a single bifolio in the whole manuscript that was significantly thicker than the others – in fact, it felt as though it had been made in a completely different way to the other vellum leaves. As I recall, it was not far from folio #50: was it f47? If that was selected, was it representative of the rest of the bifolios, or was it an outlier?

* The 2009 ORF documentary (around 44:36) shows Greg Hodgins slicing off a thin sliver from the edge of f68r3 (the ‘Pleiades’ panel), with the page apparently facing away from him. But if you look just a little closer at the scans, you’ll see that this is extremely close to a section of the page edge that has been very heavily handled over the years, far more so than much of the manuscript. This was also right at the edge of a multi-panel foldout, which raises the likelihood that it would have been close to an animal’s armpit. Personally, I would have instead looked for pristine sections of vellum that had no obvious evidence of heavy handling: picking the outside edge of f68 seems to be a mistake, possibly motivated more by ease of scientific access than by good historical practice.

As I said to Greg Hodgins in Frascati, my personal experience of stats is that it is almost impossible to design a statistical experiment properly: the shortcomings of what you’ve done typically only become apparent once you’ve tried to work with the data (i.e. once it’s too late to run it a second time). The greater my experience with stats has become, the more I hold this observation to be painfully self-evident: the real world causality and structure underlying the data you’re aiming to collect is almost without exception far trickier than you initially suspect – and I can see no good reason to believe that the Voynich Manuscript would be any kind of exception to this general rule.

I’m really not claiming to be some kind of statistical Zen Master here: rather, I’m just pointing out that if you want to make big claims for your statistical inferences, you really need to take an enormous amount of care about your experimental methodology and your inferential machinery – and right now I’m struggling to get even remotely close to the level of certainty claimed here. But perhaps I’ll have been convinced otherwise by the time I write Part Two… 🙂

Nick, the standard methods are fairly ‘standard’ world-wide. You could ask Greg for the code number of the one(s) he used, and then read the original. Most are public documents. Should help allay concerns about sampling methods, I hope.

Diane: in my opinion, it’s not as simple as the scientists (and documentary-makers) would have you believe. Basically, there’s a complex interaction between historical evidence and scientific hypotheses which I’m not convinced they’ve fully nailed down in this instance. It’s dead easy to misdesign stats experiments and to get stats inferences wrong – the scientific data collection part can end up being a relatively minor part of the overall process. 🙁

Good points, Nick… but in addition, in my opinion, whether or not the C14 tests, or the interpretation of the results from them, is dead on accurate or not… we still lack a good data base of the habits of vellum use: Exactly how far out from creation is “normal”, or even “rare”. Even rare cases of old vellum use open a wide door of possibilities. Most of this has so far been assumed, there is no significant data set. And in the two “tests of the tests” on vellum/parchment/paper, in ’72 and ’10, there are instances in which the data allows for vellum up to about 200 years old being used. And of course, considering the VMs tests accurate… which I assume at this point… many theories from scholarship out to imaginative still put the laying down of the ink at least 30+ years past the C14 dating.

But I agree with you, one way or the other, this is an issue which should be examined… even if one would not like to follow me out to the outer edges of dating… it seems there are still many questions to ask and be answered. It is not a done deal by any means, IMHO.

Rich: because of the way multiple ‘humps’ often appear within the probabilistic ‘envelope’ produced by radiocarbon dating curves, I think it always makes sense to try to combine scientific dating evidence with (for example) codicological, art historical, and palaeographical evidence… in fact, comparing radiocarbon dating evidence with palaeography dating evidence was a key part of Greg H’s slides in Frascati. For me, these evidences all combine to paint a persuasive picture of multiple quirations and rebindings pretty much all the way from “Vellum to Prague”, so I’m reasonably comfortable with the overall dating here. My post here is more about exploring the precision of the headline 1404-1438 radiocarbon dating range, because I have specific doubts about two of the samples – which, out of a set of four observations, is actually quite a lot. 🙁

On this subject, when Wolfgang Lechner was compiling his list of “to dos”, I suggested C14 dating of the VMs cover. Of course this is a later addition… but the actual timing of the re-binding itself would be an important bit of knowledge, I think. Did Kircher do it? Becx? Did it happen in one of the dark periods of ownership? It goes hand in hand, I think, with your extensive work on identifying the reordering of the manuscript, to know when this may have been done.

“…because I have specific doubts about two of the samples – which, out of a set of four observations, is actually quite a lot.”

… and yes, I agree with you, and your points on this.

Rich: it’s a good question, but I’d like to get the vellum dating locked down first. 😉

Nick, Rich, and Diane:

Does anyone know anything about the publishers of Kircher’s works? They would have had to handle/dismantle the ms in order for their engravers to make the printing press plates? Two references are presented by Godwin: “Schott and others”, and Johann Stephen Kestler.

Y’never know what you might find in the “attic” of some publisher that has been in the family business for centuries.

Hi Nick,

just to clarify about the one ‘thicker’ page. The pages to be tested were selected just to allow the largest possible range of results. After all, sheets could have come from different sources, and have been written at different times. Thus, one herbal A and one herbal B page were selected (these may/might have been written at different times).

The thicker page could be from a different lot. Since Jim Reeds already reported this many years ago, I took notes of it during my own visit in 1999, and used these to recommend fol.47. Cleaning of the sample was not an issue in this decision.

The selection of fol.8 was because this has the Tepenec signature and it is good practice to use samples that have other dating information.

Cheers, Rene

Those individual folio distributions look approximately Gaussian to me, so why the angst about combining them? The #68 lower peak is inconsistent with the others, so if included would tend to skew the mean of the means downwards (but personally I would exclude it from the calculation – wouldn’t you?).

I’m not sure if it is your intention in this post to throw some doubt on the validity of the method, or on the validity of the result?

Julian

Rene: I strongly suspect that therein lies the root of the paradox – even though the leaves to be sampled were selected to be as different from each other as possible, the maths used to combine the four results relies on them being as similar as possible (but without actually being identical). Moreover, if the thick folio was from (as I recall) the only superthick bifolio in the whole manuscript, it is almost by definition a codicological outlier, i.e. unrepresentative of the rest of the bifolios.

I would agree that selecting a wide range of pages for sampling has helped to build a mean date range that is good for constructing an overall eliminative case – however, the flipside is that this same heterogeneity undermines the assumption of shared population normality that the maths relies upon when merging the four results into one mega-result. It seems to me that you can, given so few observations to work with, design the overall statistical experiment either for a more reliable mean or for tighter precision, but not for both at the same time. A bit like a codicological version of Heisenberg’s Uncertainty Principle! 😉

Incidentally, it is perhaps also a little puzzling that f8 has the latest date of all four samples despite being attached to the very first (and hence one might suspect the earliest) folio f1. At the same time, a separate angle on f1r is that I would expect the titled paragraphs to be describing different ‘books’ contained within the overall work (indeed, possibly even Books A, B, and C now I mention it 🙂 ), yet these paragraphs all seem to have been written in one go, rather than added sequentially over time. Perhaps this f1-f8 bifolio was damaged during the writing process and was replaced with a fresh bifolio near the end? Might the f1-f8 bifolio therefore be a copy of a damaged enciphered bifolio? Something to think about! 🙂

Julian: as I mentioned in my reply to Rene’s comment, I’m pretty sure that the restricted set of sampling done here could have been designed to approximate a reliable mean or a tighter precision, but not both at the same time. The issue I’m flagging here is that the results have been widely reported as if both outcomes have been achieved simultaneously using just four samples, which I don’t currently believe is actually possible.

Hello! First of all, this site is great! I’m completely ignorant when it comes to C14 dating, so here go the possibly dumb questions:

If the first sample fraction is 0409, why are you subtracting 490 from the 1950 date? Shouldn’t it be 409 years? Wondering if that is a typo or some part of the equation that I don’t understand… Can you explain how that works please? Could you please also translate the approximate C14 date for each sample as opposed to the average for all of them? Beyond this, I am also interested in why there is such a gap between the C14 result in the various samples: shouldn’t they be more similar? Does this mean that different parts of the book were written/copied at different times, then bound together?

If these questions have already been answered elsewhere, I’d be happy with a link! Thanks and congrats for your wonderful website!

Hi Nick,

on the combination of data, one can certainly argue both ways. Note that the decision to combine all four samples was made after seeing that the four standard distributions (in the percentage domain) all overlapped. The ‘outlier’ f68, is still only 1.5 sigma or so away from the average, and 1.5 is not really an outlier, hence the quotation marks (but the point about the few samples does indeed apply).

Finally, since our observation granularity is of the order of 20 years at the very best, we don’t know if the book(s) was/were written at once, or with gaps of several years.
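One conventional way to put a number on “all overlapped” is a chi-squared consistency check of the four fractions against their inverse-variance weighted mean, of the kind often run before pooling radiocarbon measurements. The sketch below is an assumption about how one might do this, not a record of what was actually done for the Voynich samples; the 7.815 threshold is the standard 5% critical value of chi-squared with 3 degrees of freedom. Exactly which sigma the “1.5 sigma” figure refers to is not stated, so the per-folio numbers here need not match it.

```python
# (corrected fraction modern, standard deviation) from the table in the post
samples = {
    "f8": (0.9409, 0.0044),
    "f26": (0.9380, 0.0041),
    "f47": (0.9389, 0.0041),
    "f68": (0.9338, 0.0041),
}

CHI2_CRIT_3DF_5PCT = 7.815  # 5% critical value, 3 degrees of freedom (n - 1)

# Inverse-variance weighted mean of the four fractions
weights = {k: 1.0 / s**2 for k, (_, s) in samples.items()}
mean = sum(weights[k] * f for k, (f, _) in samples.items()) / sum(weights.values())

# Each sample's distance from the mean, in units of its own sigma
residuals = {k: (f - mean) / s for k, (f, s) in samples.items()}
t_stat = sum(r**2 for r in residuals.values())

for folio, r in residuals.items():
    print(f"{folio}: {r:+.2f} sigma from the weighted mean")
print(f"T = {t_stat:.2f}; statistically consistent at 5% if T < {CHI2_CRIT_3DF_5PCT}")
```

On these figures the four fractions pass comfortably, with f68 the furthest from the mean. That only speaks to statistical consistency in the fraction domain; it says nothing about whether the four slivers were representative of the manuscript, which is the separate concern raised in the post.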

re carbon dating. I’ve just seen this in a report on the ‘other’ conference:

“Carbon dating at the National Science Foundation—Arizona Accelerator Mass Spectrometry (AMS) Laboratory at the University of Arizona revealed that the parchment used for the folios dated to the 1450’s. Analyses by McCrone Associates suggest the drawing and writing inks are from the same period.”

now maybe a difference of perhaps as little as 15 yrs (or as many as 30) doesn’t sound like much but at that period in European history it makes one huge difference in terms of art history, exploration by Europeans.. all kinds of things. I do hope this is just sloppy reporting and not a revision of the original report!

http://www.conservators-converse.org/2012/05/40th-annual-meeting-bpg-afternoon-session-may-9-3/

btw – I agree with Julian about #68, even assuming that it was by reference to the Standard Method, and with allowance for duplication (not just by reference to Rene’s comments) that the samples were selected.

But maybe that explains the seeming revision of the dates in that post from the Conservators.. maybe.

Diane: I suspect it’s just, ummm, imprecise reporting. I agree with you and Julian that f68 does appear inconsistent, but (as Greg Hodgins notes) it’s not inconsistent by very much. Hard to be sure what to say for the best… =:-o

Nick – quite right. Yale confirms that

“..that is a reporter’s error. According to Dr. Greg Hodgins of the University of Arizona, who conducted the carbon dating tests on the VMS, the parchment making up the manuscript can be dated, with 95% accuracy, to the years 1404-1438, and with 68% accuracy to 1411-1430.”

Nick, I followed up on your reference to “the animal’s armpit”:

Yesterday, I found a neat online article: “New Evidence of Note-Taking in the Medieval Classroom” http://medievalfragments.wordpress.com

This may come up as a link. For now, I only want to point out that the writer talks about using strips of parchment/vellum that had been cut away after the skin had been removed from the stretchers: the “waste” was either used as “notepaper/scratchpaper” or was consigned to the “boiling pot” to be made into glue.

So, might the piece of vellum you discuss as “being near the animal’s armpit” have been a “borderline” quality piece of parchment that was consigned to use as “note-taking” material rather than a “finished” scriptorium product?

I still believe that the script, itself, is Beneventan minuscule! I’ll give you a ref for that belief in another post.

addendum: the link to which I just referred, is dated 2012/06/01

A “minuscule” correction to my earlier posts:

Not necessarily “Beneventan” minuscule as far as the VMss script is concerned, but rather “Southern Italy” minuscule. It probably still depends on whether the VMss was produced in a monastery, institute of learning, or even a school such as “Cues” of Paris. The script is still uncial, maybe Oscian/Volscian/Vellitraen uncial……and certain characters do appear in Beneventan scripts.

The “key” to a partial clearing of the confusion re the script can be found at the website “Omniglot”.

What we seem to have then are gathered excerpts or single sheets, probably obtained from relics(?) of a single scriptorium and all made (despite their very different cut-marks in the binding) within a relatively short period. Otherwise, surely, we’d have more than the few different hands that have been identified. Fascinating glimpse into the circumstances ..

Nick,

I owe you an apology. Unless Rene consulted with UAri in advance and picked samples using an appropriate standard method – still waiting to find out – then even giving Greg 100% for collection and analysis still means we can only consider dated those folios which were chosen to suit the interest of one of us, hobbyists though we are.

In scientific terms, it means a sample so biased that its results, no matter how accurate for those four samples, can be taken as a dating only for those folios.

Pity such a vital test wasn’t left to the specialists, but then they hadn’t the money I suppose.

Helps explain why there hasn’t been a scientific paper written, and perhaps too why the Beinecke has so far not changed the dates given on its introduction.

So, I guess I should offer them an apology on that score, too.

PS – In response to my question on the mailing list about which folios had been tested, Rene hasn’t yet given an answer, but he did say that information which you’ve offered here was not accurate. I assume (since that was my question) that he means the folio numbers you cite are not those which Rene chose, after all.

Diane: since this post (more than a year ago), I’ve spent more time working with the radiocarbon dating data.

What seems to be the case is that the two “humps” in the curve (the twin modes of a bimodal distribution) offer two alternate bubbles of historic possibility, but we are reasonably able to discount the earlier hump on probabilistic grounds.

Hence the values going into the calculations should be the means and distributions for the right hand humps only. This wasn’t entirely obvious to me a year ago, but I’ve pretty much got there now.

However, I have to say I still have strong doubts about the Leaf #68 sample… but that’s a discussion for another day.

Diane: as I recall, Greg Hodgins said that the samples were carefully selected following consultation with both Rene and domain experts. I am happy with the folios chosen, but I just wish the sliver on f68 had been taken far away from the area of handling discoloration.

The sampling lacked rigor in its initial stage.

I expect Greg meant he was happy that the samples would yield valid results in the lab. About actual collection, processing and analysis by Greg himself, I’ve no issue. Sure it was meticulous.

Negative:

Results were all gained from quires taken from only the upper half of the stack. All but one ‘page’ concerns the same subject (botanical) and might be assumed likely made at much the same time, anyway.

Proper randomised sampling would include a majority of plant-pictures anyway, I expect – but not all from the top of the stack.

Positive side:

consistency in folio size – apparently.

If one day Yale can spare an in-house codicologist and/or conservator, our having a full codicological description will be a joyful moment.

I mean one referring to such things as hair-sides vs skin sides, variations in parchment finish, any signs of trimming and so on. And formal identification of variations and probable locality/ -ies.

I’m relying on various Voynicheros’ personal memories – but happily none suggests that any of the manuscript’s quires were trimmed.

If that’s true, it’s a good thing: Ms’ dimensions (225 mm x 160 mm) are a little unusual and if all of them are naturally so (untrimmed) it adds to the possibility that all these quires came from a consistent source, possibly in the form of pre-prepared gatherings rather than single parchment sheets.

It’s not reason enough to assert that the dates obtained for three botanical sheets and one astronomical sheet apply to every section in the whole ms, but it is reason to hope.

Diane: the samples were consciously chosen rather than randomised – the Beinecke curators only allowed them to take four samples, and so they used other criteria.

Personally, because of the risk of accidentally picking the same animal skin twice, I’d have only picked one Herbal-A folio and one Herbal-B folio, but they had their reasons. Maybe some day these will be written up properly… or maybe they won’t.

sure – but with this sort of analysis results are as valid as the samples are representative. So we have a pretty firm date for the botanical section and one other sheet.

If the manuscript had never been re-bound or disordered, or if it were a printed book, it wouldn’t matter so much.

Trouble is, a medieval florilegium can contain quires dated, in the worst case, several centuries apart.

The Tepenec page was a good control to choose; I’d have picked that too.

D

Nick,

May I reproduce the first diagram above? I’d like to show it side by side with one of Julian Bunn’s if I may.

Diane: sure, no problem. By the way, re-reading the post and all the comments, everything there still stands. 🙂

.. does it now? 😀

and thank you.

Diane: let me know when you put your post on voynichimagery…

Nick, it’s scheduled for the 19th of this month. If you like, I can send you a preview to vet. I don’t know that it will make so much sense out of context. It’s #4 in a series that is a basic guide to the way a conservator might evaluate the manuscript: codicology but not much overlap with your work, I think. Not so far, anyway.

I’m enjoying these overview posts. It’s a good way to see that other people’s work is more widely advertised. I’m surprised by how little you see by way of citations of Bunn’s analyses or your textual codicology.

Perhaps someone’s next Voynich book should be called the Maze of Voynich-eriana.